TSL Module Configuration

The TSL (Time-Series Library) module is a new PointValueDao implementation for Mango 4.3 which supports reading/writing to various time-series databases via an abstraction layer library written by Radix IoT. Currently, the TSL module supports two different databases:

Aggregation Support

Starting from Mango 4.4, the TSL module supports pre-aggregating point values and storing them so they can be queried using Mango’s “rollup” mechanism. Down-sampling point values in this fashion can reduce the disk usage of the stored values dramatically — e.g., 5s period data aggregated into 15-minute windows reduces samples by a factor of 180.

The TSL module supports storing aggregates for NUMERIC data type points only. It stores count, sum, min, and max; and calculates average as arithmetic mean.

Three new rollup types were added:

| Original Rollup | New Rollup (Aggregates) | Difference |

|---|---|---|

| MINIMUM | MINIMUM_IN_PERIOD | Excludes the start value of each period |

| MAXIMUM | MAXIMUM_IN_PERIOD | Excludes the start value of each period |

| AVERAGE | ARITHMETIC_MEAN | Time-weighted average vs. arithmetic mean |

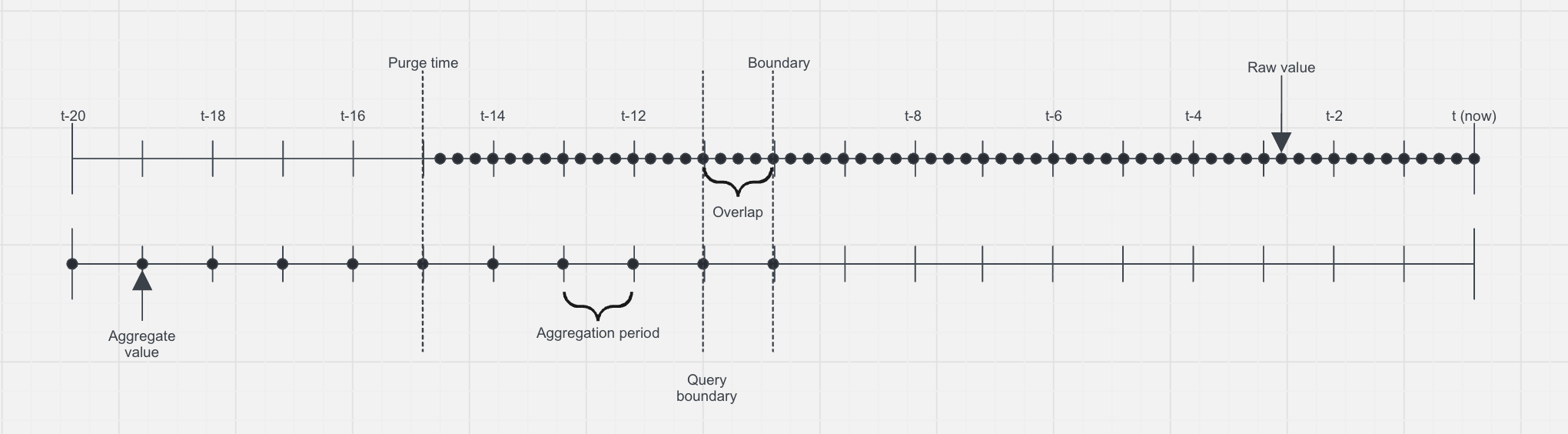

The TSL module will store raw data for a configured amount of time (termed the “boundary” period), after which the raw data is aggregated and stored in a separate aggregates table. Querying of point values across this boundary is seamless, the raw data is aggregated on the fly and concatenated with the pre-aggregated values. Both the raw data and the pre-aggregated data can be resampled to any requested rollup period, e.g. if you have 15 minute aggregates you can still view a hourly rollup - the backend will combine 4x aggregate values into a single hourly aggregate.

Retention Policies

Data retention is configured at the database level. Mango’s Purge System Settings have no effect on TSL data.

Suggested policy:

Numeric Values

- Keep raw data for 3 weeks

- Aggregate 15-minute periods

- Retain aggregates for 10 years

Non-Numeric Values

- Keep raw data for 10 years

- Use “log on change” if needed

- No aggregation is applied

Suggested TimescaleDB Settings

# enable timescale and set to highest priority

db.tsl.timescale.enabled=true

db.tsl.timescale.order=0

# configure database connection parameters

db.tsl.timescale.host=localhost

db.tsl.timescale.port=5432

db.tsl.timescale.db=mango_tsl

db.tsl.timescale.username=mango

db.tsl.timescale.password=password

# max number of records to insert at once (not used for queries)

db.tsl.timescale.chunkSize=16384

# how to handle duplicate values for the same series/timestamp (UPDATE/IGNORE/ERROR)

db.tsl.timescale.conflictMode=UPDATE

# time zone for truncating dates so they align with aggregation period

db.tsl.timescale.aggregation.zone=UTC

# aggregation period (note: if period is not specified, aggregation is disabled)

db.tsl.timescale.aggregation.period=15 MINUTES

# boundary where querying switches between aggregate/raw values

db.tsl.timescale.aggregation.boundary=2 WEEKS

# overlap between aggregate values and raw values

db.tsl.timescale.aggregation.overlap=1 DAYS

Suggested Clickhouse Settings

# enable clickhouse and set to highest priority

db.tsl.clickhouse.enabled=true

db.tsl.clickhouse.order=0

# configure database connection parameters

db.tsl.clickhouse.host=localhost

db.tsl.clickhouse.port=8123

db.tsl.clickhouse.db=default

db.tsl.clickhouse.username=default

db.tsl.clickhouse.password=

# max number of records to insert at once (not used for queries)

db.tsl.clickhouse.chunkSize=16384

# how to handle duplicate values for the same series/timestamp (UPDATE/IGNORE)

db.tsl.clickhouse.conflictMode=UPDATE

# time zone for truncating dates so they align with aggregation period

db.tsl.clickhouse.aggregation.zone=UTC

# aggregation period (note: if period is not specified, aggregation is disabled)

db.tsl.clickhouse.aggregation.period=15 MINUTES

# boundary where querying switches between aggregate/raw values

db.tsl.clickhouse.aggregation.boundary=2 WEEKS

# overlap between aggregate values and raw values

db.tsl.clickhouse.aggregation.overlap=1 DAYS

Setting Clickhouse Retention Policy

Manually configure the raw data table to retain data longer for your desired data types. We suggest that you keep at least 3 weeks of raw data in case the aggregation process fails, this gives a buffer to allow aggregation later before the raw data is deleted. The following command keeps raw data for numeric values for 21 days and raw data for all other data types for 10 years.

ALTER TABLE data MODIFY TTL

toDateTime(time) + INTERVAL 21 DAY DELETE WHERE numeric_value IS NOT NULL,

toDateTime(time) + INTERVAL 10 YEAR DELETE;

If necessary one can configure the aggregated data retention. This only applies to numeric value aggregations.

ALTER TABLE aggregates MODIFY TTL

toDateTime(time) + INTERVAL 10 YEAR DELETE;

How To Set Up and Run ClickHouse TSL on Local Mango

Requirements

- Local Mango instance with

paths.dataset to/opt/mango-data

Docker Installation

Using docker-compose:

services:

clickhouse:

image: clickhouse/clickhouse-server:latest

ports:

- 8123:8123

- 9000:9000

volumes:

- ./ch_data:/var/lib/clickhouse/

- ./ch_logs:/var/log/clickhouse-server/

environment:

- CLICKHOUSE_PASSWORD=password

- CLICKHOUSE_USER=username

Or using docker run:

docker run -d \

-p 8123:8123 -p 9000:9000 \

-v $(realpath ./ch_data):/var/lib/clickhouse/ \

-v $(realpath ./ch_logs):/var/log/clickhouse-server/ \

-e CLICKHOUSE_PASSWORD=password -e CLICKHOUSE_USER=username \

--name some-clickhouse-server-2 --ulimit nofile=262144:262144 clickhouse/clickhouse-server

If using Colima and you receive "operation not permitted" file errors, check:

colima issue #83

Create the Database

docker exec -it radixiot-clickhouse-1 bash

/bin/clickhouse-client

create database radix_tsl;

Configure Mango Properties

# Disable db.nosql

db.nosql.enabled=false

Enable clickhouse tsl

---------- TSL ----------

db.tsl.clickhouse.enabled=true

db.tsl.clickhouse.order=0

db.tsl.clickhouse.host=localhost

db.tsl.clickhouse.port=8123

db.tsl.clickhouse.db=radix_tsl

db.tsl.clickhouse.username=username

db.tsl.clickhouse.password=password

db.tsl.clickhouse.chunkSize=16384

---------- TSL ----------

Set up some defaults if you need to

------ TSL DEFAULTS ------

db.tsl.clickhouse.batchInsert.threadCount=4

db.tsl.clickhouse.conflictMode=UPDATE

db.tsl.clickhouse.configureRetentionPolicy=false

db.tsl.clickhouse.aggregation.zone=UTC

db.tsl.clickhouse.aggregation.period=15 MINUTES

db.tsl.clickhouse.aggregation.boundary=2 WEEKS

db.tsl.clickhouse.aggregation.overlap=1 DAYS

db.tsl.clickhouse.aggregation.configureRetentionPolicy=false

db.tsl.clickhouse.aggregation.retentionPeriod=10 YEARS

db.tsl.clickhouse.socketTimeout=60000

db.tsl.clickhouse.connectionTimeout=60000

------ TSL DEFAULTS ------

Verify Clickhouse is working

Run Mango and check the logs for the string ClickhousePointValueDaoDefinition initialized.

Example:

INFO 2024-03-12T11:31:04,058 (com.infiniteautomation.mango.spring.MangoRuntimeContextConfiguration.pointValueDao:420) - Time series database ClickhousePointValueDaoDefinition initialized

Verify in the database that records are being inserted.

select * from radix_tsl.data;

You should see records being inserted with proper timestamps and values.

Example output:

┌────────────────────time─┬─series_id─┬─multistate_value─┬─numeric_value─┬─text_value─┬─binary_value─┐

│ 2024-03-12 17:28:52.062 │ 1231 │ ᴺᵁᴸᴸ │ 1 │ ᴺᵁᴸᴸ │ ᴺᵁᴸᴸ │

└─────────────────────────┴───────────┴──────────────────┴───────────────┴────────────┴──────────────┘

┌────────────────────time─┬─series_id─┬─multistate_value─┬─numeric_value─┬─text_value─┬─binary_value─┐

│ 2024-03-12 17:31:16.199 │ 1231 │ ᴺᵁᴸᴸ │ 1 │ ᴺᵁᴸᴸ │ ᴺᵁᴸᴸ │

└─────────────────────────┴───────────┴──────────────────┴───────────────┴────────────┴──────────────┘

┌────────────────────time─┬─series_id─┬─multistate_value─┬─numeric_value─┬─text_value─┬─binary_value─┐

│ 2024-03-12 17:29:02.062 │ 1230 │ ᴺᵁᴸᴸ │ 62 │ ᴺᵁᴸᴸ │ ᴺᵁᴸᴸ │

└─────────────────────────┴───────────┴──────────────────┴───────────────┴────────────┴──────────────┘

┌────────────────────time─┬─series_id─┬─multistate_value─┬─numeric_value─┬─text_value─┬─binary_value─┐

│ 2024-03-12 17:31:26.199 │ 1230 │ ᴺᵁᴸᴸ │ 62 │ ᴺᵁᴸᴸ │ ᴺᵁᴸᴸ │

└─────────────────────────┴───────────┴──────────────────┴───────────────┴────────────┴──────────────┘

How To Set Up and Run TimescaleDB TSL on Local Mango

Requirements

- Mango running in IntelliJ and licensed

- Docker installed

Docker Installation

Using docker-compose:

services:

clickhouse:

image: timescale/timescaledb:latest-pg14

ports:

- 5432:5432

volumes:

- </your/data/dir>:/var/lib/postgresql/data

environment:

- POSTGRES_PASSWORD=password

Or using docker run:

docker run -d \

-p 5432:5432 \

-e POSTGRES_PASSWORD=password \

-v </your/data/dir>:/var/lib/postgresql/data \

--name timescaledb timescale/timescaledb:latest-pg14

Connect to TimescaleDB container

docker exec -it timescaledb psql -U postgres

List Installed Extensions

postgres=# \dx

List of installed extensions

Name | Version | Schema | Description

-------------+---------+------------+---------------------------------------------------------------------------------------

plpgsql | 1.0 | pg_catalog | PL/pgSQL procedural language

timescaledb | 2.14.2 | public | Enables scalable inserts and complex queries for time-series data (Community Edition)

(2 rows)

Create TimescaleDB database and connect to it

postgres=# CREATE DATABASE mango_tsl;

postgres=# \c mango_tsl

Mango Properties

# Disable db.nosql

db.nosql.enabled=false

Enable timescale tsl

---------- TSL ----------

enable timescale and set to the highest priority

db.tsl.timescale.enabled=true

db.tsl.timescale.order=0

configure database connection parameters

db.tsl.timescale.host=localhost

db.tsl.timescale.port=5432

db.tsl.timescale.db=mango_tsl

db.tsl.timescale.username=postgres

db.tsl.timescale.password=password

max number of records to insert at once (not used for queries)

db.tsl.timescale.chunkSize=16384

how to handle duplicate values for the same series/timestamp (UPDATE/IGNORE/ERROR)

db.tsl.timescale.conflictMode=UPDATE

time zone for truncating dates, so they align with aggregation period

db.tsl.timescale.aggregation.zone=UTC

aggregation period (note: if period is not specified, aggregation is disabled)

db.tsl.timescale.aggregation.period=15 MINUTES

boundary where querying switches between aggregate/raw values

db.tsl.timescale.aggregation.boundary=2 WEEKS

overlap between aggregate values and raw values

db.tsl.timescale.aggregation.overlap=1 DAYS

---------- TSL ----------

Verify Setup

Run mango and check the logs for the string TimescalePointValueDaoDefinition initialized

INFO 2024-04-05T16:35:23,600 (com.infiniteautomation.mango.spring.MangoRuntimeContextConfiguration.pointValueDao:420) - Time series database TimescalePointValueDaoDefinition initialized

List database relations

These commands connect to the TSL database and confirm that the proper tables are in place.

postgres=# \c mango_tsl

postgres=# \dt

Schema | Name | Type | Owner

--------+-----------------------+-------+----------

public | aggregates | table | postgres

public | flyway_schema_history | table | postgres

public | point_values | table | postgres

(3 rows)

Describe point_values table

This command inspects the structure of the point_values table to ensure that the raw point data is stored in a highly optimized format for time-series queries.

postgres=# \d point_values

Column | Type | Collation | Nullable | Default

------------------+--------------------------+-----------+----------+---------

time | timestamp with time zone | | not null |

series_id | bigint | | not null |

multistate_value | integer | | |

numeric_value | double precision | | |

text_value | text | | |

binary_value | boolean | | |

Indexes:

"point_values_series_id_time_idx" UNIQUE, btree (series_id, "time" DESC)

"point_values_time_idx" btree ("time" DESC)

Triggers:

ts_insert_blocker BEFORE INSERT ON point_values FOR EACH ROW EXECUTE FUNCTION _timescaledb_functions.insert_blocker()

Describe aggregates table

This command inspects the structure of the aggregates table that holds the pre-aggregated numeric data so you can ensure that the rolled up values are being properly stored.

postgres=# \d aggregates

Column | Type | Collation | Nullable | Default

-----------+--------------------------+-----------+----------+---------

time | timestamp with time zone | | not null |

series_id | bigint | | not null |

min | double precision | | |

max | double precision | | |

count | integer | | |

sum | double precision | | |

Indexes:

"aggregates_series_id_time_idx" UNIQUE, btree (series_id, "time" DESC)

"aggregates_time_idx" btree ("time" DESC)

Triggers:

ts_insert_blocker BEFORE INSERT ON aggregates FOR EACH ROW EXECUTE FUNCTION _timescaledb_functions.insert_blocker()

Querying point_values data

This SQL statement allows you to verify that time-series data is being properly stored with a series_ID and the date time/ point value combinations.

mango_tsl=# SELECT * FROM point_values limit 10;

time | series_id | multistate_value | numeric_value | text_value | binary_value

------------------------+-----------+------------------+--------------------+------------+--------------

2024-04-05 00:00:00+00 | 309 | | 50 | |

2024-04-05 00:00:05+00 | 309 | | 50.000182476907646 | |

2024-04-05 00:00:10+00 | 309 | | 49.9663825228253 | |

2024-04-05 00:00:15+00 | 309 | | 50.012480019475234 | |

2024-04-05 00:00:20+00 | 309 | | 49.91455146802638 | |

2024-04-05 00:00:25+00 | 309 | | 49.86211722809043 | |

2024-04-05 00:00:30+00 | 309 | | 49.81930953391918 | |

2024-04-05 00:00:35+00 | 309 | | 49.87720131460692 | |

2024-04-05 00:00:40+00 | 309 | | 49.90686387414849 | |

2024-04-05 00:00:45+00 | 309 | | 49.955001048885684 | |

(10 rows)